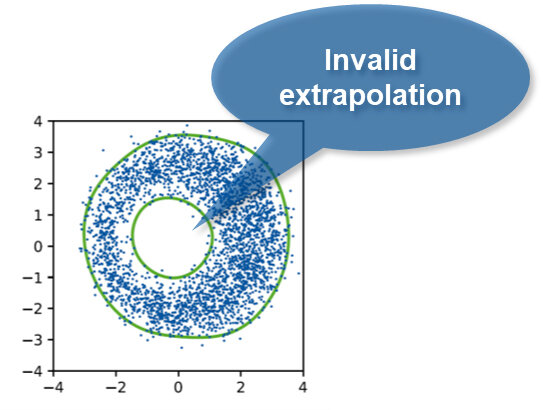

Is my prediction valid? – Data-driven models cannot extrapolate and often make false predictions!

Artificial neural networks and other data-driven models are increasingly used in engineering to learn the underlying relationships between inputs and outputs. A critical limitation of data-driven models is their poor ability to extrapolate. In other words, a data-driven model can only be valid within the region where it has sufficiently dense coverage of training data points. Unfortunately, data from experimental or industrial setups is often unevenly distributed, which leads to clusters and holes in the training data domain. Identifying these holes is crucial because model evaluations inside them can be invalid. In addition, optimization and process operation must respect the validity domain of the model.
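To make the notion of a hole concrete, here is a minimal Python sketch on made-up data: training inputs placed on a ring, so the center of the input domain contains no data at all. A simple bounding-box check accepts the center as "inside the data", while a nearest-neighbor coverage check with an assumed gap tolerance (`max_gap`) rejects it. The helpers `make_ring_data`, `in_bounding_box`, `nn_distance`, and `in_dense_region` are hypothetical illustrations, not the method from the paper.

```python
import math


def make_ring_data(radii=(1.0, 1.25, 1.5, 1.75, 2.0), n_angles=36):
    """Hypothetical training inputs on a grid over an annulus: dense ring, empty hole in the middle."""
    return [
        (r * math.cos(2.0 * math.pi * k / n_angles), r * math.sin(2.0 * math.pi * k / n_angles))
        for r in radii
        for k in range(n_angles)
    ]


def in_bounding_box(x, data):
    """Naive validity check: accept x if it lies inside the axis-aligned box spanned by the data."""
    xs = [p[0] for p in data]
    ys = [p[1] for p in data]
    return min(xs) <= x[0] <= max(xs) and min(ys) <= x[1] <= max(ys)


def nn_distance(x, data):
    """Euclidean distance from x to its nearest training point."""
    return min(math.dist(x, p) for p in data)


def in_dense_region(x, data, max_gap):
    """Coverage check: accept x only if some training point lies within max_gap (an assumed tolerance)."""
    return nn_distance(x, data) <= max_gap


data = make_ring_data()
hole = (0.0, 0.0)     # center of the annulus: no training data nearby
covered = (1.6, 0.1)  # inside the sampled ring

print(in_bounding_box(hole, data))          # True: the box check does not see the hole
print(in_dense_region(hole, data, 0.5))     # False: the nearest training point is 1.0 away
print(in_dense_region(covered, data, 0.5))  # True
```

A hand-picked `max_gap` is only a crude proxy for "sufficiently dense coverage", and a suitable value depends on how each input is scaled.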

In our recent paper, we use topological data analysis to identify holes in training data sets. We then use a one-class classification approach to learn the (nonconvex) validity domain of data-driven models. The one-class classifiers can also be embedded in optimization problems to impose validity constraints. This approach improves the reliability of data-driven models and the safety of processes.
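The one-class classification and validity-constraint ideas can be sketched in a few lines of Python. This sketch assumes a Gaussian kernel-density score with a quantile threshold as the one-class classifier and a brute-force grid search as the optimizer; both are stand-ins chosen for illustration, not the classifiers or solver used in the paper, and the topological data analysis step is not reproduced. `KernelDensityOneClass`, `surrogate`, `grid_minimize`, and the `bandwidth`/`quantile` values are hypothetical. The surrogate's unconstrained minimum sits in the hole of the training data, and adding the classifier as a feasibility constraint pulls the optimum back to a region covered by data.

```python
import math


def make_ring_data(radii=(1.0, 1.25, 1.5, 1.75, 2.0), n_angles=36):
    """Hypothetical training inputs on a grid over an annulus (hole in the middle)."""
    return [
        (r * math.cos(2.0 * math.pi * k / n_angles), r * math.sin(2.0 * math.pi * k / n_angles))
        for r in radii
        for k in range(n_angles)
    ]


class KernelDensityOneClass:
    """Illustrative one-class classifier: Gaussian kernel density plus a quantile threshold.

    Points whose score falls below the `quantile`-th training score are treated as
    outside the validity domain. Bandwidth and quantile are assumed values.
    """

    def __init__(self, bandwidth=0.3, quantile=0.05):
        self.bandwidth = bandwidth
        self.quantile = quantile

    def score(self, x):
        # Mean Gaussian kernel value between x and all training points (unnormalized density).
        h2 = 2.0 * self.bandwidth ** 2
        return sum(math.exp(-math.dist(x, p) ** 2 / h2) for p in self.data) / len(self.data)

    def fit(self, data):
        self.data = list(data)
        train_scores = sorted(self.score(p) for p in self.data)
        # Accept roughly the densest (1 - quantile) share of the training data.
        self.threshold = train_scores[int(self.quantile * (len(train_scores) - 1))]
        return self

    def is_valid(self, x):
        return self.score(x) >= self.threshold


def surrogate(x):
    """Hypothetical data-driven model output to minimize; its optimum lies in the hole."""
    return x[0] ** 2 + x[1] ** 2


def grid_minimize(f, candidates, constraint=None):
    """Brute-force minimization over candidates, optionally filtered by a validity constraint."""
    feasible = [c for c in candidates if constraint is None or constraint(c)]
    return min(feasible, key=f)


grid = [(i / 10.0, j / 10.0) for i in range(-25, 26) for j in range(-25, 26)]
clf = KernelDensityOneClass().fit(make_ring_data())

x_free = grid_minimize(surrogate, grid)
x_valid = grid_minimize(surrogate, grid, constraint=clf.is_valid)
print("unconstrained:", x_free, "valid:", clf.is_valid(x_free))
print("validity-constrained:", x_valid, "valid:", clf.is_valid(x_valid))
```

Because the accepted region follows the estimated data density rather than a convex hull, it can be nonconvex and exclude the hole. In a gradient-based or global optimizer, the same idea would enter as an inequality constraint such as `clf.score(x) - clf.threshold >= 0`.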

Reference

Schweidtmann, A. M., Weber, J. M., Wende, C., Netze, L., & Mitsos, A. (2021). Obey validity limits of data-driven models through topological data analysis and one-class classification. Optimization and Engineering, 1-22.